Clear, thoughtful policies are the foundation for using AI responsibly and maintaining trust across teams.

For HR leaders, navigating AI adoption means balancing innovation with accountability. From differing regulations across jurisdictions to workforce impacts, the key is creating a framework that adapts to evolving needs.

In this guide, we’ll explore how your team can ensure compliance with an effective AI policy for your company and offer a free AI policy template to give you a head start.

<<To create your own effective AI policy, download our AI policy checklist>>

Why you need an AI policy: Keeping pace with AI compliance legislation

Artificial intelligence (AI) is reshaping how companies operate, offering exciting possibilities alongside unique challenges. Almost 80 percent of organizations now use AI in at least one business function, up from 72 percent in early 2024 and 55 percent a year earlier.

With little precedent for how to handle this technology, HR departments are in the unusual position of being able to craft comprehensive guidelines on the practicalities of AI usage from a relatively blank page.

But as AI legislation continues to grow, staying compliant will be another matter entirely. New laws, discussions, and frameworks are in the works to ensure AI advances remain ethical, controlled, and ultimately beneficial. For multinational teams, legislation can be even more complex. For example, the rules that apply in the United States (which can also vary from state to state) differ from those in the United Kingdom, Australia, or Israel, each of which takes its own approach.

This complicates the process of creating an ever-evolving company policy but also highlights the increasing need for such a policy to be in place. For HR, staying updated on these legislative changes isn’t optional—it’s essential. Ensuring compliance across multiple jurisdictions is a process that requires continuous learning and adaptation.

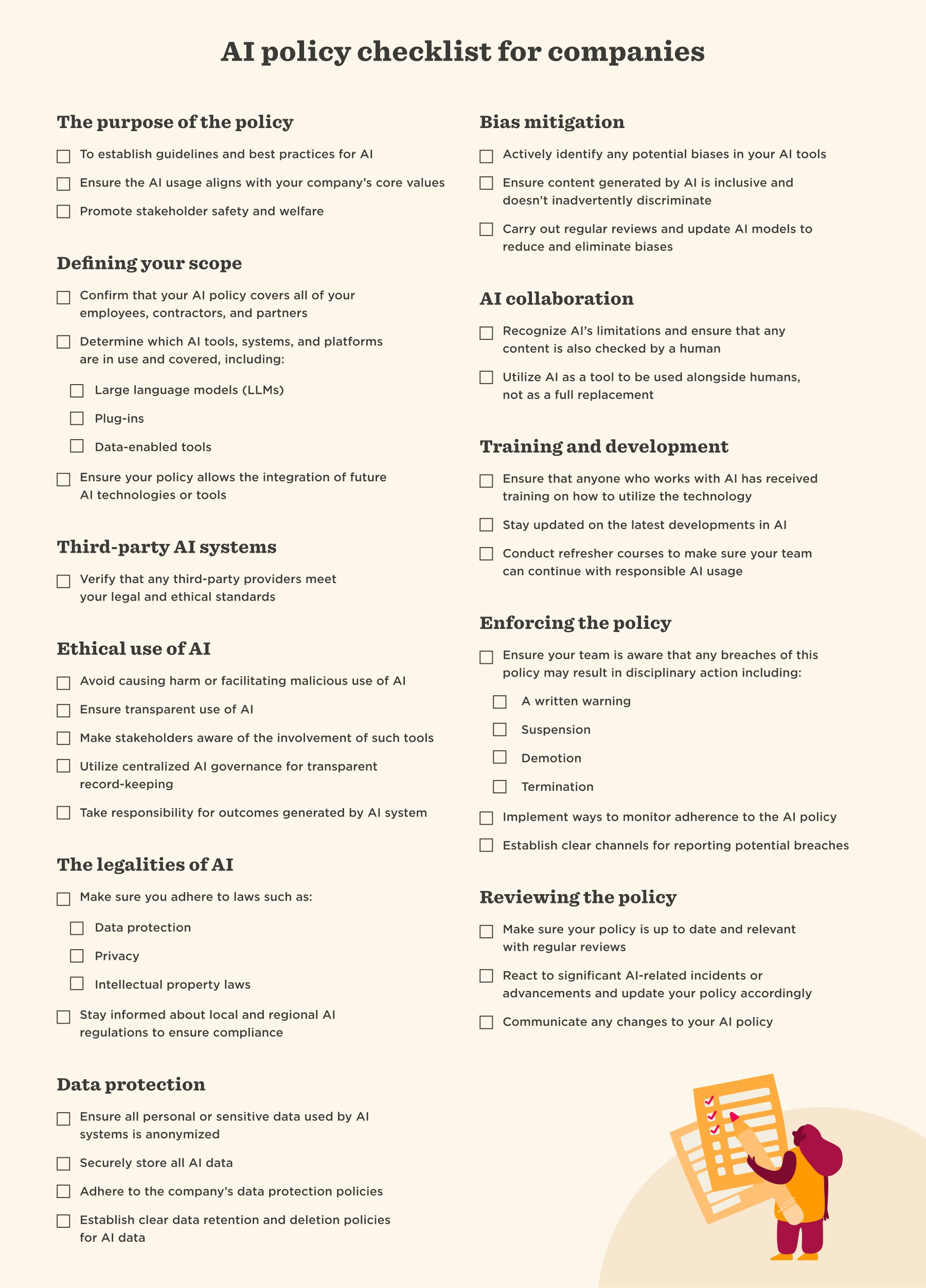

AI policy checklist for companies

We’ve created this AI policy checklist as a condensed guide to help you create your own policy or make sure yours is up to date. It provides a quick overview of everything you’ll need to consider to maintain the highest standards of integrity, safety, and transparency. The sections below go into more detail.

How to write an AI policy

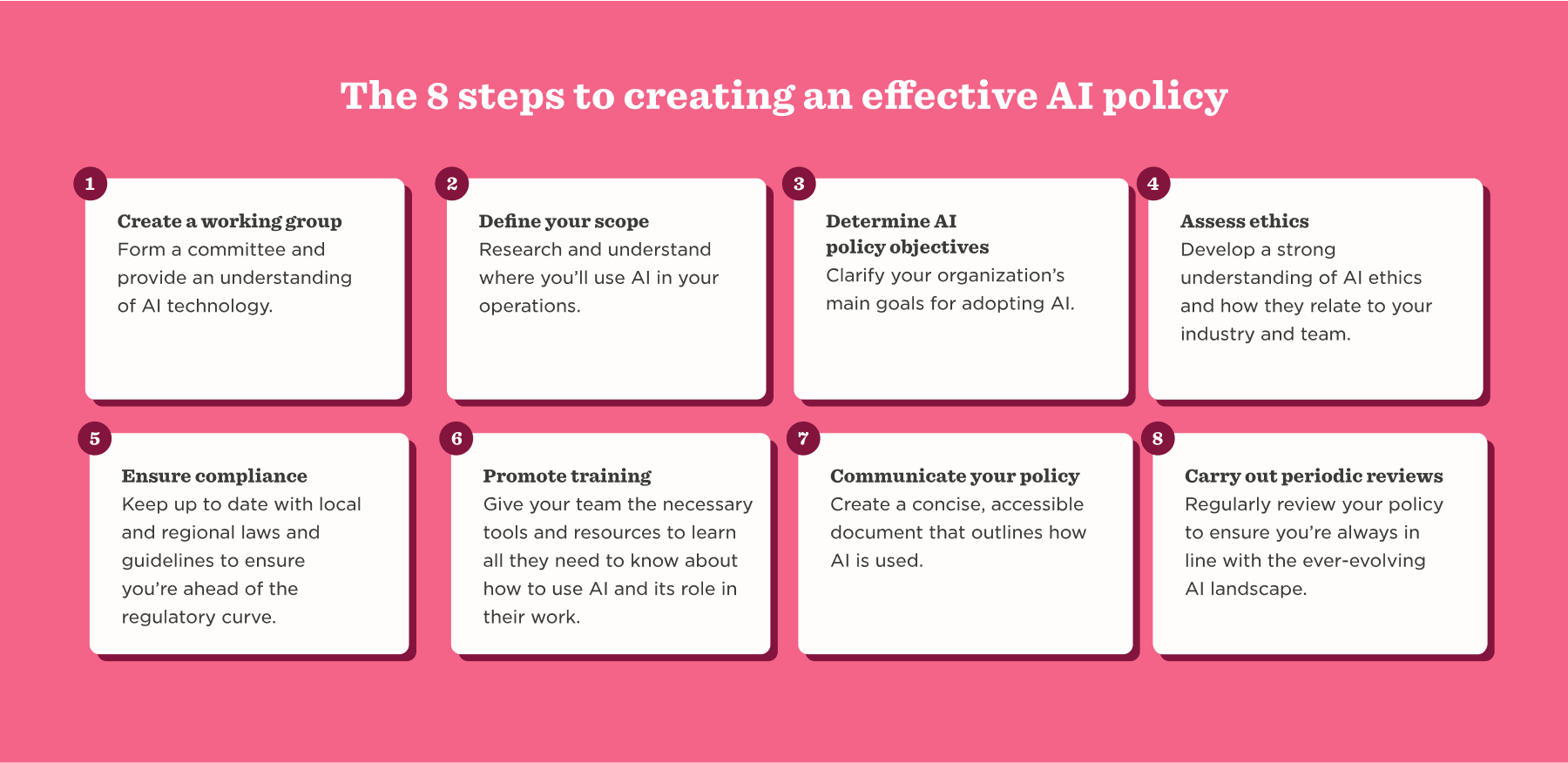

When creating your own AI policy, it’s important to take a structured approach and set a clear direction. Here are key steps to help steer you through the process:

1. Create a working group and provide baseline AI education

Form a committee that includes board members, executives, stakeholders, and other key contributors to develop the AI policy. Equip this group with a solid understanding of AI and its ethical implications through training sessions, workshops, and more.

2. Define your scope and boundaries

Start by identifying the areas where AI can add the most value within your operations—from automating routine tasks to enhancing data-driven decision-making. Once these opportunities are clear, set thoughtful boundaries that guide how your team engages with AI. This ensures a balanced integration that leverages the strengths of both artificial and human intelligence, encouraging innovation while maintaining trust and transparency.

3. Determine AI policy objectives for the company

Clarify your organization’s main goals for adopting AI. Are you aiming to boost efficiency, enhance customer experience, or drive innovation? Your policy should outline the types of AI allowed, detailing specific models and tools and any permissions needed for particular applications.

4. Assess ethical implications and AI risks for your organization

Assess the ethical implications of AI within your organization by developing a strong understanding of AI ethics and how they relate to your industry and workforce. Evaluate how AI tools may influence diversity, inclusivity, and fairness across teams and processes. Aligning your policies with ethical best practices helps promote responsible AI adoption, reflects your company’s values, and supports long-term trust.

You can also determine potential AI risks by having your internal AI or ethics committee assess each use case thoroughly. Encourage discussions around known challenges such as algorithmic bias, data privacy concerns, security vulnerabilities, and unintended outcomes that may affect users or stakeholders.

Identifying these risks early allows your team to propose targeted mitigation strategies—whether that involves refining data inputs, improving model transparency, or setting usage limits—ensuring AI tools are implemented responsibly and effectively.

5. Evaluate AI laws and regulations to ensure compliance

Stay up to date with local and regional laws and guidance, such as the European Union’s Artificial Intelligence Act, the United Kingdom’s AI Regulation White Paper, and the United States’ voluntary NIST AI Risk Management Framework (NIST AI RMF), to ensure ongoing compliance. Understanding how these evolving frameworks apply to your operations helps you anticipate changes, adjust your policies, and demonstrate a commitment to responsible AI use.

Taking this proactive approach positions your organization as a leader in the AI regulatory landscape and builds stakeholder trust.

6. Develop AI training procedures for team members

Develop programs and tools to provide AI skills training so your team understands how to use AI responsibly and effectively in their roles. Equip professionals with practical tools and resources that explain AI’s capabilities, its limitations, and the ethical implications of its use.

Training should include real-world examples, guidance on data privacy and fairness, and clear protocols for when human oversight is required. This foundational knowledge empowers your team to make informed decisions and build trust in AI-driven processes.

7. Communicate your AI policy and promote transparency

Communicate your AI policy clearly and consistently across your organization. Create a concise, accessible document that outlines how AI is used, what data it relies on, and the principles guiding its implementation. Share the policy through internal channels like onboarding materials, all-hands meetings, and your company handbook to ensure broad awareness.

Emphasize transparency around AI decision-making, data usage, and accountability to build trust and encourage open dialogue across teams.

8. Carry out periodic AI audits

Regularly track AI usage to ensure compliance with your policy. Review your policy periodically to keep pace with the ever-changing AI landscape, adapting to new challenges and opportunities.

AI policy template

Crafting an AI policy is like setting the ground rules for a game. It’s all about making sure everyone knows how to play fairly and responsibly.

Here’s what your AI policy should cover:

Purpose

This section explains why the policy exists and what it aims to achieve. It sets the tone for ethical, responsible AI use and reinforces your commitment to innovation balanced with accountability. Make sure this section:

- Declares the intent to use AI in a way that enhances business efficiency, supports people, and builds customer trust

- Emphasizes responsible innovation and data stewardship as key priorities

- Reinforces alignment with company mission, values, and stakeholder expectations

Scope

Clarify who the policy applies to and which technologies it covers to ensure that expectations are clearly set across departments and jurisdictions. Be sure to include:

- Applicable business units (e.g., HR, product, legal, marketing)

- Covered technologies: machine learning, generative AI, predictive analytics, etc.

- Jurisdictions the policy aligns with, such as those for the EU, UK, or US

- Which in-house and third-party AI tools are allowed

Policy

Lay out the core guidelines for AI use. Aligning these policies with your core values helps ensure AI use supports your mission. This section should:

- Approve use cases like internal productivity tools, content summarization, and customer support automation

- Prohibit uses that involve surveillance, sensitive biometric data, or decisions without human input

- Require a use case approval checklist before adoption

- Ensure all AI projects document business purpose and risk level

Legal compliance

Maintaining compliance with AI laws protects your organization from legal and reputational risks. This section outlines your obligations and how they’re managed and helps you:

- Comply with the EU AI Act and applicable privacy laws such as the EU’s General Data Protection Regulation (GDPR), and align with frameworks such as the UK AI Regulation White Paper and the US NIST AI RMF

- Designate a compliance lead or officer to track regulatory changes

- Implement annual audits of all AI systems and practices

- Include legal review in the procurement process for new AI tools

Transparency and accountability

Transparency builds trust in AI systems. This section defines how decisions are documented and who is responsible for outcomes, including:

- Requiring documentation of data sources, model assumptions, and decision logic

- Assigning AI system owners within each team

- Storing audit logs for all critical AI-driven decisions

- Creating a dashboard or repository for policy visibility and updates

Data privacy/security

AI systems must handle personal and sensitive data with care. This section outlines how privacy is protected and how data breaches are managed. Use it to:

- Mandate data minimization and anonymization wherever feasible

- Describe how to encrypt all personal data used in AI models

- Explain expectations for conducting penetration tests and vulnerability scans for AI systems

- Develop a breach response playbook with timelines and responsibilities

Bias and fairness

Address bias in AI systems by:

- Requiring the use of inclusive, representative datasets in model training

- Applying bias detection tools during development and post-launch

- Setting fairness KPIs (e.g., demographic parity, equal opportunity)

- Involving diverse reviewers in AI testing and audits
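To make fairness KPIs like demographic parity and equal opportunity measurable, a team might compute them directly from an AI tool’s decisions. The sketch below is a minimal illustration under stated assumptions: decisions are binary (1 = favorable outcome, such as advancing to interview), each case has a group label, and for equal opportunity a true "qualified" label is known. The function names and sample data are hypothetical, and what counts as an acceptable gap is a policy decision. Open-source libraries such as Fairlearn provide maintained implementations of metrics like these.

```python
from typing import Sequence


def selection_rate(decisions: Sequence[int]) -> float:
    """Share of cases that received the favorable outcome (decision == 1).

    Returns 0.0 for an empty group; a real audit should flag that case instead.
    """
    return sum(decisions) / len(decisions) if decisions else 0.0


def demographic_parity_difference(
    decisions: Sequence[int], groups: Sequence[str]
) -> float:
    """Largest gap in selection rate between any two groups (0.0 = parity)."""
    rates = []
    for g in set(groups):
        group_decisions = [d for d, grp in zip(decisions, groups) if grp == g]
        rates.append(selection_rate(group_decisions))
    return max(rates) - min(rates)


def equal_opportunity_difference(
    decisions: Sequence[int], labels: Sequence[int], groups: Sequence[str]
) -> float:
    """Largest gap in true positive rate between groups, i.e. the share of
    qualified cases (label == 1) that received the favorable outcome."""
    tprs = []
    for g in set(groups):
        qualified = [
            d for d, y, grp in zip(decisions, labels, groups) if grp == g and y == 1
        ]
        tprs.append(selection_rate(qualified))
    return max(tprs) - min(tprs)


if __name__ == "__main__":
    # Hypothetical screening results for eight candidates in two groups.
    decisions = [1, 0, 1, 1, 0, 1, 0, 0]
    labels = [1, 0, 1, 1, 1, 1, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

    print(round(demographic_parity_difference(decisions, groups), 3))  # → 0.5
    print(round(equal_opportunity_difference(decisions, labels, groups), 3))  # → 0.667
```

In this made-up example, group A is selected 75 percent of the time and group B 25 percent of the time, so the demographic parity gap is 0.5. A policy could set a maximum acceptable gap and require review whenever an audit exceeds it.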

Human-AI collaboration

AI should complement—not replace—human judgment. Highlight the importance of keeping human oversight in AI processes and describe how AI complements human roles for the best results. This section should:

- Identify decisions requiring mandatory human review (e.g., hiring, termination, credit approvals)

- Build workflows with “human-in-the-loop” checkpoints

- Empower staff to challenge or override AI outputs

- Train managers on oversight responsibilities and escalation procedures
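A "human-in-the-loop" checkpoint can be expressed as a simple routing rule: any AI recommendation in a category that requires mandatory review, or one below a confidence threshold, goes to a person before it takes effect. The sketch below is hypothetical. The category names, the 0.9 threshold, and the `Recommendation` structure are illustrative assumptions rather than features of any specific tool, and not every AI tool reports a confidence score.

```python
from dataclasses import dataclass

# Hypothetical decision categories that always require human review,
# mirroring the policy examples (hiring, termination, credit approvals).
MANDATORY_REVIEW = {"hiring", "termination", "credit_approval"}

# Hypothetical confidence floor; outputs below it are escalated to a person.
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class Recommendation:
    category: str      # e.g., "hiring" or "content_summary"
    output: str        # the AI tool's suggested action or text
    confidence: float  # assumed model-reported confidence, 0.0 to 1.0


def route(rec: Recommendation) -> str:
    """Return 'human_review' or 'auto_approve' for an AI recommendation."""
    if rec.category in MANDATORY_REVIEW:
        return "human_review"  # policy: a person must sign off
    if rec.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # low confidence: escalate
    return "auto_approve"      # staff can still challenge or override later


if __name__ == "__main__":
    print(route(Recommendation("hiring", "Advance candidate", 0.97)))        # human_review
    print(route(Recommendation("content_summary", "Summary text", 0.95)))    # auto_approve
    print(route(Recommendation("content_summary", "Summary text", 0.60)))    # human_review
```

Checking the mandatory-review list first means a high-confidence score can never bypass human sign-off on sensitive decisions such as hiring.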

Training and education

To use AI responsibly, people must understand it. Provide plans for AI education and training, share resources for learning about AI, and promote a culture of continuous learning. This section can:

- Deliver onboarding modules covering AI basics and policy overview

- Offer quarterly workshops on ethics, compliance, and tool-specific training

- Publish curated learning paths (e.g., Coursera, LinkedIn Learning, internal wiki)

- Include scenario-based training to highlight practical risks and responses

Third-party services

Set guidelines for using third-party AI services by:

- Requiring vendors to disclose model architecture, training data, and bias mitigation strategies

- Including contract clauses for ethics, security, and compliance standards

- Reviewing third-party tools annually for ongoing alignment

- Maintaining a vetted vendor list approved by compliance or legal teams

Implementation

Finally, outline the steps for implementing, enforcing, and updating the policy. This section of your policy can include:

- Guidelines for publishing the policy in an internal knowledge hub and notifying all teams

- Assignments of team members to a cross-functional AI governance committee for oversight

- Schedules for biannual reviews and update cycles

- Defined feedback channels for team members to raise concerns or suggest changes

<<To create your own effective AI policy, use our free template. >>

How to approach AI challenges in HR

The integration of AI into the corporate sphere is not without its challenges. For HR professionals, these challenges can cover several different areas:

- Recruitment. Recruitment has always been about finding the crème de la crème of talent and moving them further along your hiring process. But what happens when candidates have the power of AI at their fingertips? What about when CVs and cover letters are AI-generated, or take-home tests are completed with the help of chatbots? Is this cheating? Or is it ingenuity, strategically using the tools at their disposal?

- On-the-job usage. For some, AI tools are productivity boosters that streamline tasks and promote efficiency. Others come to rely on AI out of necessity, which can raise questions about overreliance or a lack of effort. This makes establishing clear rules about AI usage a priority.

- Income supplementing. Since AI usage went mainstream, there have been reports of people holding down multiple “full-time” jobs because generative AI tools like ChatGPT have made them far more productive. While some may argue that this is already covered by a conflict-of-interest policy, others may see it as a novel issue that needs addressing.

In the face of challenges such as these, don’t let ambiguity creep into your processes. Each challenge warrants a clear company policy that outlines the dos and don’ts of AI usage in the workplace. That way, everyone is on the same page, and even well-intentioned misuse of AI can be caught and corrected.

Understanding basic AI concepts and risks

Here are some basic AI risks that can help inform your policy:

- False information. While AI is a powerful source of knowledge, it can still be prone to churning out inaccurate, misleading, or downright incorrect information.

- Algorithmic bias. AI systems can unintentionally reflect or amplify biases present in their training data, which may lead to decisions that unfairly disadvantage certain groups. Regularly assessing AI outputs for bias can help mitigate this risk.

- Plagiarism. AI can generate content, but that content is derived from patterns in preexisting source material and can sometimes closely reproduce it, leading to the possibility of unintentional plagiarism.

- Copyright infringement. In a similar vein to the plagiarism issue, there’s concern around AI inadvertently using copyrighted material.

- Privacy and data protection. AI tools often process large data sets, and safeguards for how inputs are stored or reused vary from tool to tool, raising concerns about the confidentiality of intellectual property and sensitive data.

- Ethical AI decision-making. Ensuring AI systems make decisions that align with ethical standards helps maintain fairness and accountability in business operations.

- Societal impact. The deployment of AI can affect employment patterns and social structures. Companies should consider these implications and strive for solutions that benefit society at large.

- Local/regional AI compliance. Depending on where you are in the world, there are different and ever-evolving rules regarding AI. This makes it complicated to ensure your operations remain above board and compliant with local and regional regulations.

- Monitoring, accountability, and incident response. AI use can be difficult to monitor, which makes it harder to detect and track policy violations and data breaches.

AI policy examples

Looking for a place to start with your AI policy? Take a cue from industry leaders who’ve crafted robust guidelines. These companies have set the bar high with their comprehensive AI policies, offering a solid foundation you can build on:

- Microsoft: Microsoft’s AI policy is grounded in six core principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. These principles guide the development and deployment of AI systems to ensure ethical and responsible use. Microsoft has implemented a Responsible AI standard that provides actionable guidelines for teams to uphold these principles in practice.

- Google: Google’s AI principles emphasize developing AI that is socially beneficial, avoids creating or reinforcing unfair bias, is built and tested for safety, is accountable to people, incorporates privacy design principles, upholds high standards of scientific excellence, and is made available for uses that accord with these principles.

- Walmart: Walmart has articulated a Responsible AI Pledge centered around six commitments: transparency, security, privacy, fairness, accountability, and customer-centricity. This pledge reflects Walmart’s dedication to using AI in ways that are ethical, safe, and beneficial to customers, members, and associates.

- Cigna: Cigna’s approach to AI is guided by principles of transparency, accountability, and safety. The company emphasizes the ethical use of AI to benefit stakeholders, including customers, clients, providers, and employees. Cigna has established a cross-functional group to assess new AI use cases and ensure they align with these ethical principles.

- Wells Fargo: Wells Fargo focuses on building responsible AI systems that are transparent, explainable, and compliant with regulatory standards. The bank emphasizes the importance of offering alternatives to AI-based services to accommodate customer preferences and ensure trust. Wells Fargo’s AI initiatives aim to enhance customer experiences while maintaining ethical and regulatory compliance.

The delicate balance between artificial and human intelligence

There is a fine line between the convenience and danger of AI. For HR leaders, the mission is clear: Harness the power and potential of AI while crafting guidelines that protect the interests of both your organization and your people.

Robust AI policies aren’t just about responding to technological evolution. They’re also a statement that signals your readiness to embrace the future with clarity, conviction, and compassion.

AI company policy FAQs

Do companies need a dedicated team to monitor AI use?

While it’s not required, having a dedicated team to oversee AI use is a smart move. This group can keep an eye on AI integration, ensure policies are followed, and quickly tackle any issues that pop up. With a focused approach, you can stay ahead of challenges and take swift action when needed, ultimately protecting your organization’s interests.

Do I need an AI expert to develop an artificial intelligence use policy?

Having an AI expert on board is helpful, but it’s not a must. An expert can offer valuable insights into the tech details and potential pitfalls. However, a diverse team that includes HR professionals, legal advisors, and IT specialists can create a well-rounded policy. This collaborative effort ensures your policy is thorough and aligns with company goals.

What should teams do if AI tools produce incorrect or biased results?

If AI tools give skewed results, quick action is key. First, figure out why the error happened. Check the data inputs, algorithms, and processes involved. Once you know the cause, make the necessary adjustments—like refining algorithms or updating data sets—to fix the issue and prevent it from happening again. Having a clear protocol for these situations builds trust in your AI systems and helps you avoid further problems.

What are some disciplinary actions for AI misuse?

Disciplinary actions for AI misuse should match the severity of the issue. For minor problems, a verbal warning or extra training may be enough. Serious violations, like data privacy breaches, might call for written warnings, suspension, or even separation. Clearly stating these consequences in your AI policy helps ensure everyone understands the importance of responsible AI use.

What is a good AI policy?

A good AI policy is clear, comprehensive, and adaptable. It should outline acceptable AI use, address ethical concerns, and comply with relevant laws. Effective policies encourage transparency and accountability while supporting continuous learning. Regular reviews keep the policy up to date with tech advances and legal changes, ensuring it remains a valuable tool for your organization.