AI is reshaping every facet of work across the majority of industries.

It’s changing how teams operate every day. From hiring and onboarding to productivity and performance management, AI is now embedded into the flow of work.

But as innovation accelerates, regulation is racing to keep up.

Every major region is taking its own approach to AI governance, and global companies can no longer afford to wait and see. Compliance missteps can lead to fines or data exposure, but the real cost is reputational damage and losing the trust of your people, customers, and stakeholders.

The EU AI Act is in the midst of its tiered rollout, state-level legislation is coming online in the United States, and Australia is drafting a future-facing policy.

Together, these policies are shaping global norms.

Meanwhile, new regulations around privacy, surveillance, and algorithmic transparency are gaining momentum across sectors.

These changes carry ripple effects across the business:

- HR teams play a critical role in ensuring fair, bias-free hiring while safeguarding talent pipelines against deepfakes and fraudulent applications. They’re also crucial to supporting safe, compliant AI use in performance reviews, onboarding, and internal mobility workflows.

- IT leaders oversee vendors, govern data, and keep AI-powered systems aligned with evolving audit and security standards across all tools and regions.

- Executives and legal teams are responsible for aligning business goals with region-specific AI laws, managing reputational and financial risk, and guiding company-wide policies on how AI is used within the flow of work across a global workforce.

In this guide, we’ll break down AI regulation by region and provide actionable steps you can take to monitor legal changes, protect your people and tech, and operationalize compliance without stifling innovation.

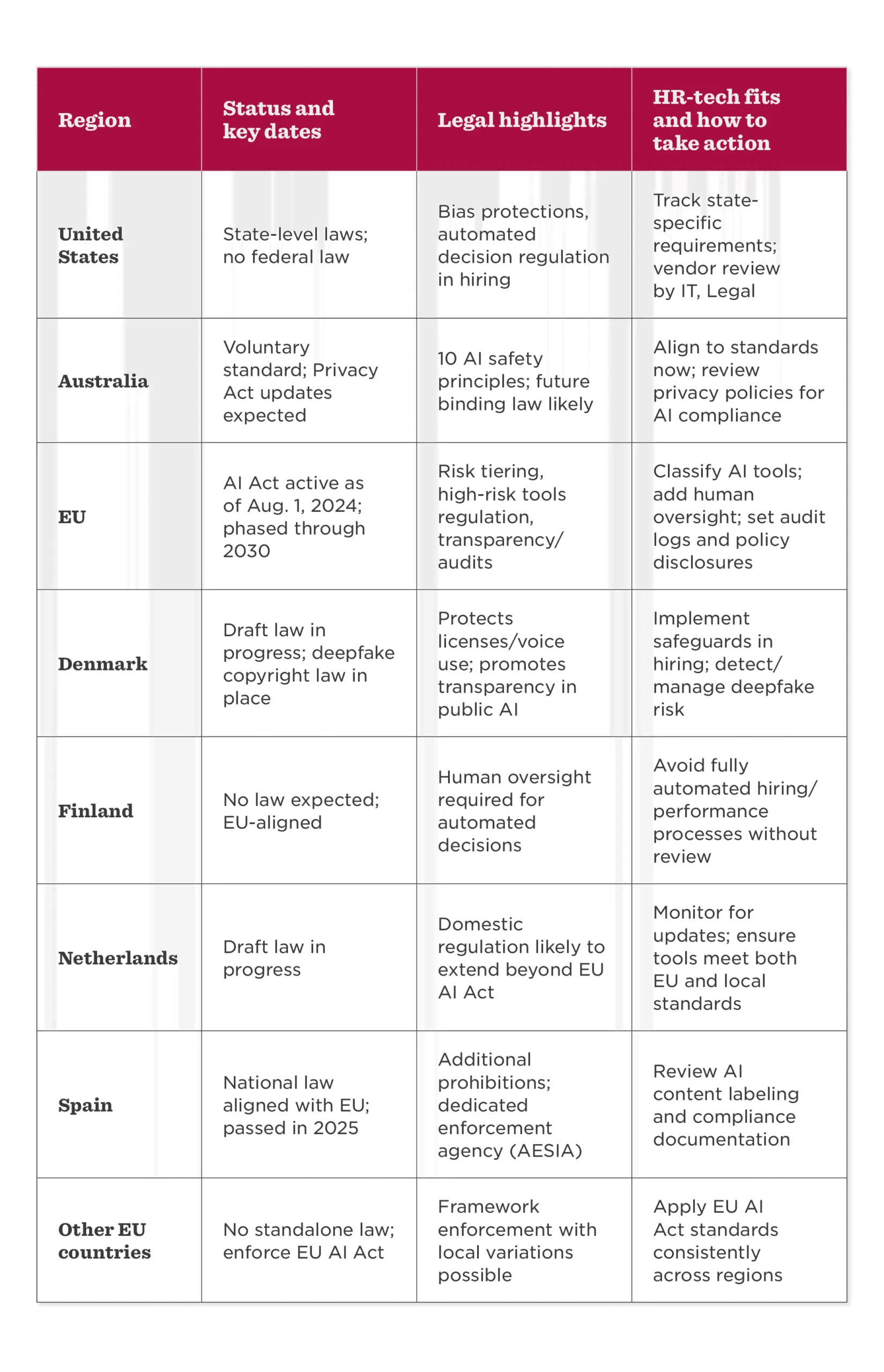

AI regulation around the world: What to know by region

AI innovation may be global, but AI regulation is still regional.

Every country (and state in the US) is taking a different approach.

Some are moving quickly with detailed frameworks and enforcement plans, while others are introducing voluntary codes or relying on broader data protection laws.

The result is a patchwork of obligations that creates complexity for global companies, especially when standardizing ethical AI use across HR, IT, Legal, and Compliance functions.

Here’s what to watch in the regions most relevant to global employers today:

The United States

The US still has no federal AI law, but that doesn’t mean it’s a free-for-all.

A wave of state-level legislation went live in 2025.

Texas, California, Colorado, and Utah have already introduced rules. Most of these focus on protecting people from algorithmic bias and keeping employment-related decisions fair.

For HR, IT, and procurement, this adds complexity.

Recruitment, screening, and performance management tools now need to be vetted against multiple legal standards. IT and procurement teams also need to deepen due diligence on vendors to confirm system compliance everywhere they operate.

Fragmentation is the main challenge here.

While there have been attempts to introduce broader national frameworks—most notably the so-called “One Big Beautiful Bill Act”—federal proposals have repeatedly stalled.

Earlier attempts at AI regulation, including draft federal frameworks debated in 2022 and 2023, such as the SAFE Innovation framework, also failed to progress.

This leaves businesses balancing dozens of state laws with no unifying standard.

The European Union

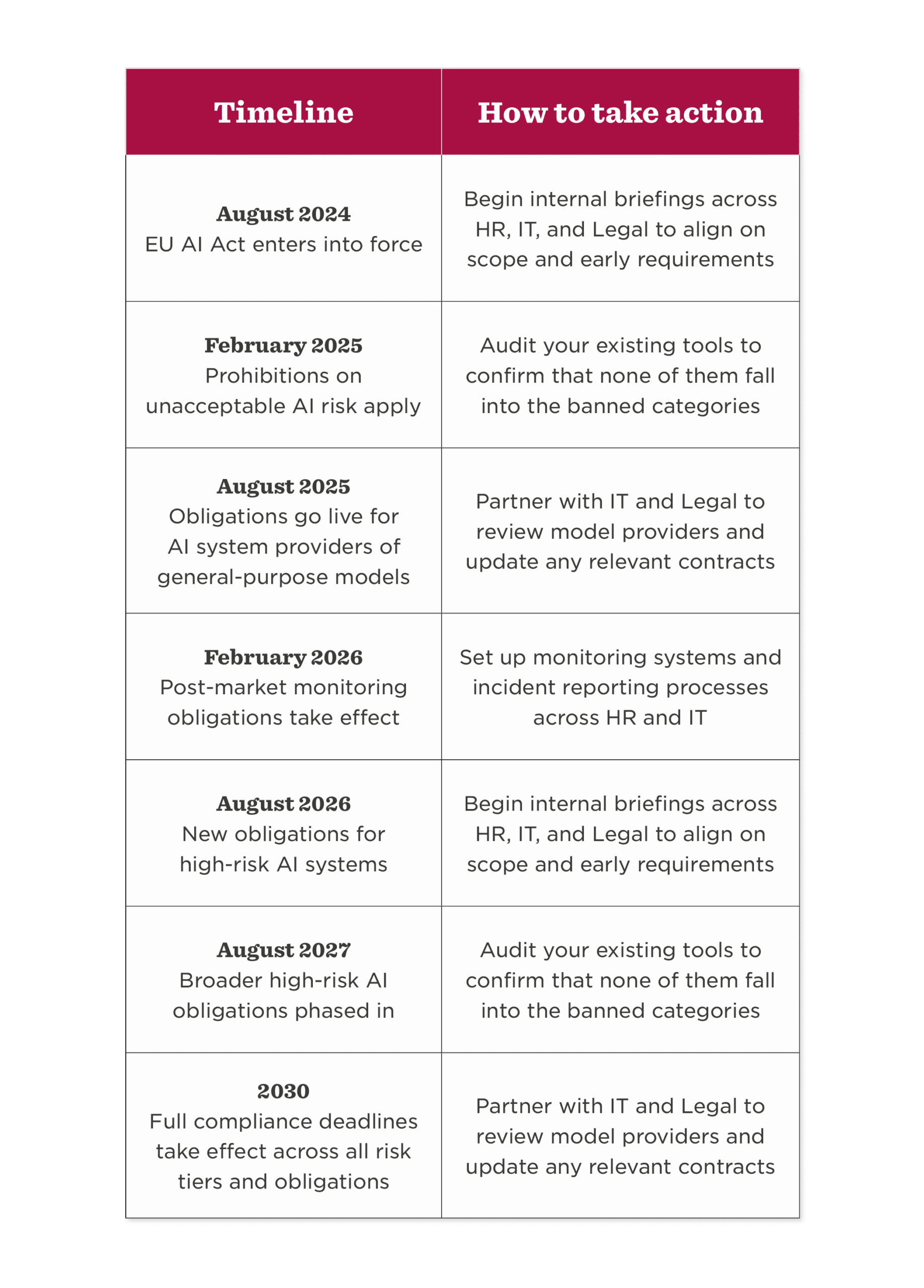

The EU AI Act took effect on August 1, 2024, with compliance deadlines phased through 2030. It’s the most comprehensive framework to date, classifying systems by risk and assigning tiered obligations.

If a business’s AI affects people in the EU, the EU AI Act applies to that business, even if the company is headquartered outside the EU.

That covers a lot. In practice, it limits fully automated hiring decisions and requires proper audit trails and transparent policies.

Some countries are pushing things even further, adding their own layers of regulation on top.

- Spain introduced an EU-aligned law with specific prohibitions, strengthening enforcement in key areas.

- Denmark is currently drafting a law focused on public sector transparency and the world’s first copyright protection law against deepfakes—a direct response to fraudulent AI-driven job applications.

- The Netherlands is drafting potential additions that may extend obligations beyond the EU Act.

- Finland doesn’t have any standalone laws in the pipeline, and none are expected because it follows the framework of the EU AI Act. However, it already has some laws covering automated decision-making.

- France, Germany, Belgium, Norway, and Sweden are following the EU Act framework without introducing standalone laws.

Australia

Australia doesn’t yet have any binding AI law, but it’s laying the foundation.

The government has introduced a voluntary AI Safety Standard and a federal AI policy, with plans that may evolve into binding law. Alongside this, the Privacy Act has been updated to cover AI-related risks, and regulators have published ‘The 10 guardrails’ on AI safety.

Businesses can’t afford to overlook these emerging laws. Regulators already expect good‑faith compliance, and voluntary standards often become tomorrow’s legal requirements.

Acting early helps HR, IT, and Legal teams strengthen readiness, improve data practices, and show people that responsible AI use is already part of the culture.

A snapshot of global AI regulations

Decoding what AI legislation means for you

Despite regional differences, most regulations share a set of foundational principles that emphasize safe, transparent, and ethical AI use.

Understanding these helps you create a baseline for compliance and prepare across multiple jurisdictions.

- Classify risk. AI systems are grouped into tiers, from minimal to high risk. The higher the risk, the stricter the rules. HR, IT, and Legal teams work best with a clear view of where each tool falls—allowing them to plan ahead.

- Be transparent and explain outcomes. Businesses need to be clear about when AI is in use and explain outcomes in plain language. That builds trust with people and shows regulators that decisions aren’t being hidden in a black box.

- Protect data and promote fairness. Regulations reinforce the safe handling of personal data and require processes to actively prevent bias. This gives HR and IT the opportunity to work together to keep data secure while making sure systems treat everyone fairly.

- Keep humans in charge. Fully automated decisions, especially in key areas like hiring or promotion, are restricted. Laws require human oversight to review outcomes and provide avenues for any appeals.

- Be audit-ready. Documentation is a must. Organizations need clear records of how they use AI systems, what data feeds into them, and who signs off on key decisions.
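
To make the first and fourth principles concrete, here is a minimal Python sketch of tagging tools by risk tier and deciding which ones need a human reviewer. The tier names, the `AITool` class, and the use-case mapping are all illustrative assumptions, not definitions taken from any specific law. Your Legal team would own the real mapping.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    """Illustrative tiers only; real tiers and definitions come from each law."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3


@dataclass(frozen=True)
class AITool:
    name: str
    use_case: str  # e.g. "hiring" or "scheduling"


# Hypothetical mapping from use case to tier (an assumption for this sketch).
USE_CASE_TIERS = {
    "hiring": RiskTier.HIGH,
    "performance_review": RiskTier.HIGH,
    "chat_assistant": RiskTier.LIMITED,
    "scheduling": RiskTier.MINIMAL,
}


def classify(tool: AITool) -> RiskTier:
    # Unknown use cases default to HIGH so they get reviewed, not ignored.
    return USE_CASE_TIERS.get(tool.use_case, RiskTier.HIGH)


def requires_human_review(tool: AITool) -> bool:
    # In this sketch, only the top tier forces a human checkpoint.
    return classify(tool) >= RiskTier.HIGH
```

Defaulting unknown use cases to the highest tier is a deliberate design choice here: a new tool nobody has classified yet gets attention instead of slipping through.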

Principles may set the baseline, but real compliance comes to life in daily practice. This is where monitoring AI and leveraging AI-powered HR tech come together—using the tech itself to turn regulations into practical steps that keep decisions transparent, auditable, and fair.

AI-powered people tech can help you:

- Document decisions. Record AI-supported decisions in hiring, pay, and performance to keep processes transparent.

- Create audit trails. Build role-based review checkpoints so HR, IT, and Legal can trace outcomes.

- Configure disclosures. Adapt transparency messaging by region so people understand how AI is shaping their experience.
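
As a rough illustration of what documenting a decision can look like, the sketch below stores one AI-supported decision alongside the human sign-off. The `DecisionRecord` fields and the `export_records` helper are hypothetical names chosen for this example, not the schema of any particular product.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class DecisionRecord:
    """One AI-supported decision plus the human sign-off (hypothetical schema)."""
    process: str            # e.g. "hiring", "pay", "performance"
    tool: str               # which AI system produced the recommendation
    region: str             # where the affected person is located
    inputs_used: List[str]  # data fields that fed the recommendation
    ai_recommendation: str
    reviewer: Optional[str] = None
    final_decision: Optional[str] = None
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def sign_off(self, reviewer: str, final_decision: str) -> None:
        # Record who reviewed the recommendation and what they decided.
        self.reviewer = reviewer
        self.final_decision = final_decision

    def is_audit_ready(self) -> bool:
        # A record counts as complete only once a human has signed off.
        return self.reviewer is not None and self.final_decision is not None


def export_records(records: List[DecisionRecord]) -> str:
    # Serialize records to JSON so HR, IT, and Legal can share one export.
    return json.dumps([asdict(r) for r in records], indent=2)
```

A record created without a reviewer reports `is_audit_ready()` as `False`, which gives teams a simple way to find decisions still waiting for human review.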

Act early: The business benefits of proactive compliance

Getting ahead of legislation is not just about minimizing risk. It’s about building trust, improving internal processes, and positioning your company as a responsible innovator.

Cross-functional impact

As AI rules take shape, compliance is no longer the job of a single team. It’s shared across the business. Every function plays a role in protecting people and performance.

- Legal. Track shifting legislation, assess risks, and manage cross-border compliance to stay ahead of new requirements.

- IT. Vet every vendor and manage data flows to ensure AI systems meet security and transparency standards so technology doesn’t become a hidden liability.

- HR. Centralize records and automate region-tagged workflows to make compliance easier to prove and keep people processes consistent across geographies.

AI-powered people tech can help you:

- Centralize dashboards. Provide leaders with one view of all AI-supported processes so nothing falls through the cracks.

- Automate tagging. Apply regional tags to workflows automatically so the system accounts for local laws.

- Generate audit-ready reports. Export compliance data HR, IT, and Legal can use to demonstrate readiness quickly.

- Share accountability. Set role-based permissions and alerts to assign ownership clearly across teams, reducing overlap and ensuring you cover every risk.
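
Automated tagging can be as simple as a lookup from location to the rule sets that may apply. The Python sketch below assumes hypothetical location codes and tag names. The mapping itself is a placeholder that Legal would need to define and maintain.

```python
from typing import Dict, List

# Hypothetical location codes and tag names, for illustration only.
REGION_TAGS: Dict[str, List[str]] = {
    "DE": ["eu-ai-act"],
    "FR": ["eu-ai-act"],
    "US-CO": ["us-state-colorado"],
    "US-CA": ["us-state-california"],
    "AU": ["au-voluntary-standard"],
}


def tag_workflow(workflow_name: str, locations: List[str]) -> dict:
    """Attach regional tags to a workflow and surface unmapped locations."""
    tags = set()
    unmapped = []
    for location in locations:
        location_tags = REGION_TAGS.get(location)
        if location_tags is None:
            # No rule set on file: route to Legal instead of assuming none apply.
            unmapped.append(location)
        else:
            tags.update(location_tags)
    return {
        "workflow": workflow_name,
        "tags": sorted(tags),
        "needs_legal_review": sorted(unmapped),
    }
```

For example, `tag_workflow("screening", ["DE", "US-CO", "BR"])` tags the workflow with `eu-ai-act` and `us-state-colorado` and lists `BR` under `needs_legal_review`, because this sketch has no mapping for it.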

Strengthening hiring integrity

AI-generated job applications and deepfake interviews are getting harder to spot, creating fresh risks for hiring teams and their pipelines.

Act early to stay ahead. Vet AI tools, train recruiters, and build fraud detection into your workflows—before regulations make it mandatory.

AI-powered people tech can help you:

- Use bias-aware assessments. Apply them to reduce manipulation and keep candidate evaluation consistent.

- Centralize records. Store all hiring decision documentation in one place with built-in oversight steps, so audits and reviews are straightforward.

- Maintain version control. Keep up-to-date versions of AI screening criteria to trace every decision back to the right rules.

- Pilot fraud detection tools. Test technologies that detect anomalies in video interviews and flag suspicious activity before it reaches hiring managers.
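
One possible way to trace a decision back to the screening criteria in force at the time is to fingerprint the criteria and store that fingerprint with each decision. The sketch below uses a content hash for this. The `criteria_version` function and the example criteria fields are assumptions for illustration.

```python
import hashlib
import json


def criteria_version(criteria: dict) -> str:
    """Return a short, stable fingerprint for a set of screening criteria."""
    # Sorting keys makes the fingerprint independent of key order.
    canonical = json.dumps(criteria, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical criteria; field names are placeholders.
criteria_v1 = {"min_years_experience": 3, "required_skills": ["sql", "python"]}
criteria_v2 = {"min_years_experience": 2, "required_skills": ["sql", "python"]}

# Store the fingerprint with each decision so it can be traced back later.
decision = {"candidate_id": "c-001", "criteria_version": criteria_version(criteria_v1)}
```

Any change to the criteria produces a different fingerprint, so an auditor can tell which version of the rules a given decision was made under.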

Building transparency into employee experiences

As AI shapes more of the employee journey, team members deserve to know how it’s influencing their careers. Transparency is not only a legal requirement. It’s also a cultural expectation.

It’s important to communicate clearly to build trust and avoid surprises.

AI-powered people tech can help you:

- Disclose AI use. Clearly communicate where AI is embedded in people systems so team members know when it’s in play.

- Show the inputs. Give people visibility into the data or metrics driving AI-generated outcomes, turning a black box into an open process.

- Configure regional consent. Adjust consent and transparency settings by location to stay aligned with local laws and expectations.

- Build in explainability. Integrate explainability into reviews and feedback tools so people understand exactly how their performance or growth is assessed.

Minimizing legal and reputational risk

Compliance goes beyond dodging fines. A single misstep can erode trust and damage your reputation. In today’s business world, that really matters.

Being audit-ready in every region helps protect your people and brand credibility. Standardizing policies and AI usage documentation also helps you stay consistent and respond proactively to legal updates and compliance triggers.

Together, these actions help keep your organization resilient and ready for change, and tech can make them easier to implement.

AI-powered people tech can help you:

- Centralize access. Store AI-supported records in one place so Legal can retrieve them quickly when questions arise.

- Build region-tagged workflows. Automate workflows by geography to make sure you’re applying the right local policies.

- Generate audit-ready exports. Provide quick reports that give regulators confidence and leadership peace of mind.

- Set risk alerts. Trigger alerts when workflows include high-risk AI activities, so HR and IT can intervene before small issues escalate.
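
A risk alert can start as a simple check of workflow steps against a list of activities your organization treats as high risk. In the sketch below, the activity names and the `risk_alerts` function are hypothetical. Which activities count as high risk is a policy decision, not something this code determines.

```python
from typing import Dict, List

# Hypothetical activity names that an organization has decided are high risk.
HIGH_RISK_ACTIVITIES = {"automated_rejection", "pay_adjustment", "promotion_ranking"}


def risk_alerts(workflows: Dict[str, List[str]]) -> List[str]:
    """Return one alert message per workflow that includes a high-risk step."""
    alerts = []
    for name, steps in workflows.items():
        flagged = sorted(set(steps) & HIGH_RISK_ACTIVITIES)
        if flagged:
            alerts.append(f"{name}: high-risk AI activity ({', '.join(flagged)})")
    return alerts
```

In practice, the returned messages would feed whatever notification channel HR and IT already use, so someone can step in before the workflow runs at scale.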

How to operationalize AI compliance across your organization

Once you understand where and how AI regulations apply, the next step is turning compliance into a working system across HR, IT, and Legal.

That means identifying risks, assigning ownership, and ensuring your teams have the right tools to take action.

Map your AI use cases

Audit how AI is already influencing the employee lifecycle, from hiring and pay to productivity and reporting. Don’t forget third-party platforms or generative AI tools—they count too.

AI-powered people tech can help you:

- Create a centralized map. Build one view of all AI-powered workflows, tagged by risk and region.

- Provide visibility. Share the same data across Legal, HR, and IT to make compliance decisions faster.
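
A centralized map can begin as a plain inventory grouped by region and risk. The sketch below assumes a hypothetical inventory format. The tool names, risk labels, and region codes are placeholders, not real products or legal categories.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical inventory entries; include third-party and generative AI tools too.
AI_INVENTORY = [
    {"tool": "resume-screener", "risk": "high", "regions": ["EU", "US"], "third_party": True},
    {"tool": "meeting-summarizer", "risk": "minimal", "regions": ["EU", "AU"], "third_party": True},
    {"tool": "pay-benchmarking", "risk": "high", "regions": ["US"], "third_party": False},
]


def build_map(inventory: List[dict]) -> Dict[Tuple[str, str], List[str]]:
    """Group tool names by (region, risk) so every team reads the same view."""
    view = defaultdict(list)
    for entry in inventory:
        for region in entry["regions"]:
            view[(region, entry["risk"])].append(entry["tool"])
    return dict(view)
```

Looking up `build_map(AI_INVENTORY)[("US", "high")]` lists the high-risk tools in use in the US, which gives Legal, HR, and IT a shared starting point for review.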

Assign owners across functions

Define accountability for legal reviews, vendor checks, and workflow transparency.

AI-powered people tech can help you:

- Set role-based permissions. Establish approvals and access levels so every task has a clear owner.

- Automate accountability. Use notifications to keep processes moving and responsibilities clear.

Monitor and adapt constantly

Laws are constantly evolving. Staying compliant means continuously retraining, updating policies, and tracking enforcement activity.

AI-powered people tech can help you:

- Set alerts. Automate notifications for regulatory changes so teams can act quickly.

- Maintain audit logs. Keep records current across HR, IT, and Legal to track compliance over time and show clear progress.

Next steps to stay AI-compliant at every site

Staying AI-compliant is a critical, ongoing initiative that spans strategy, policy, and people.

These next steps help cross-functional teams align and keep compliance on track:

- Host a leadership briefing. Bring executives together to review the regional impact of AI regulations and align on top priorities. These sessions create shared accountability at the highest level and set the tone for proactive compliance.

- Assign compliance owners. Give Legal, HR, and IT clear responsibilities for every market you operate in. By assigning ownership, you reduce blind spots and make sure each region has a dedicated point of accountability.

- Catalog AI tools. Build a full inventory of every AI-powered system in use across your people workflows, both internal and third-party. Visibility is the first step toward accountability. It also helps leaders make informed decisions when laws change.

- Review key workflows. Examine hiring, pay, and performance processes to stay audit-ready. Embedding human oversight ensures critical decisions remain fair and explainable, even in highly automated systems.

- Update internal policies. Refresh policies to disclose AI use in plain language so people understand how decisions are made. Transparency builds trust and helps the organization stay aligned with any emerging requirements.

- Centralize with people systems. Use AI-powered HR tech to consolidate compliance efforts. A centralized hub makes it easier to track region-specific rules, respond to regulatory triggers, and safeguard human oversight throughout the employee journey.

Compliance as a catalyst for trust

Past attempts at sweeping AI regulation often fizzled. That left leaders unsure how to prepare.

But things are different now. Enforcement is starting, regulators are active, and laws are finally in motion.

The choice is clear: Treat compliance as box-ticking or use it to build trust, resilience, and performance.

Act early to set the standard for responsible, people-first innovation in the new era of work.

Takeaways: Global AI regulations and compliance for 2026

- AI regulation is going global. From the EU AI Act to emerging US state laws and Australia’s AI Safety Standard, 2026 will bring broader enforcement and more cross-border implications for employers.

- The EU is setting the global benchmark. The EU AI Act’s phased rollout through 2030 will shape international compliance expectations—even for companies headquartered outside Europe.

- US businesses face a patchwork of laws. With no federal AI law, companies must navigate state-by-state requirements that focus on bias prevention, transparency, and fairness in hiring and performance decisions.

- Australia’s approach is proactive but voluntary—so far. Its federal AI policy and “10 guardrails” framework are paving the way for future binding legislation, signaling a shift toward accountability and trust-building.

- Compliance is a people strategy, not just a legal one. HR, IT, and Legal teams play complementary roles in operationalizing AI oversight, ensuring ethical use, and building organizational resilience.

- AI-powered people tech drives sustainable compliance. Centralized dashboards, audit-ready reporting, and region-tagged workflows help global companies stay aligned with evolving laws while maintaining transparency and fairness.

- Early adopters will lead the future of responsible innovation. Businesses that act now—mapping AI use cases, assigning ownership, and embedding human oversight—will earn stakeholder trust and set the global standard for ethical AI.

![Global AI regulations every business needs to know [2026]](https://res.cloudinary.com/www-hibob-com/w_600,h_447,c_fit/fl_lossy,f_webp,q_auto/wp-website/uploads/Global-AI-regulations-every-business-needs-to-know_Main-image.png)